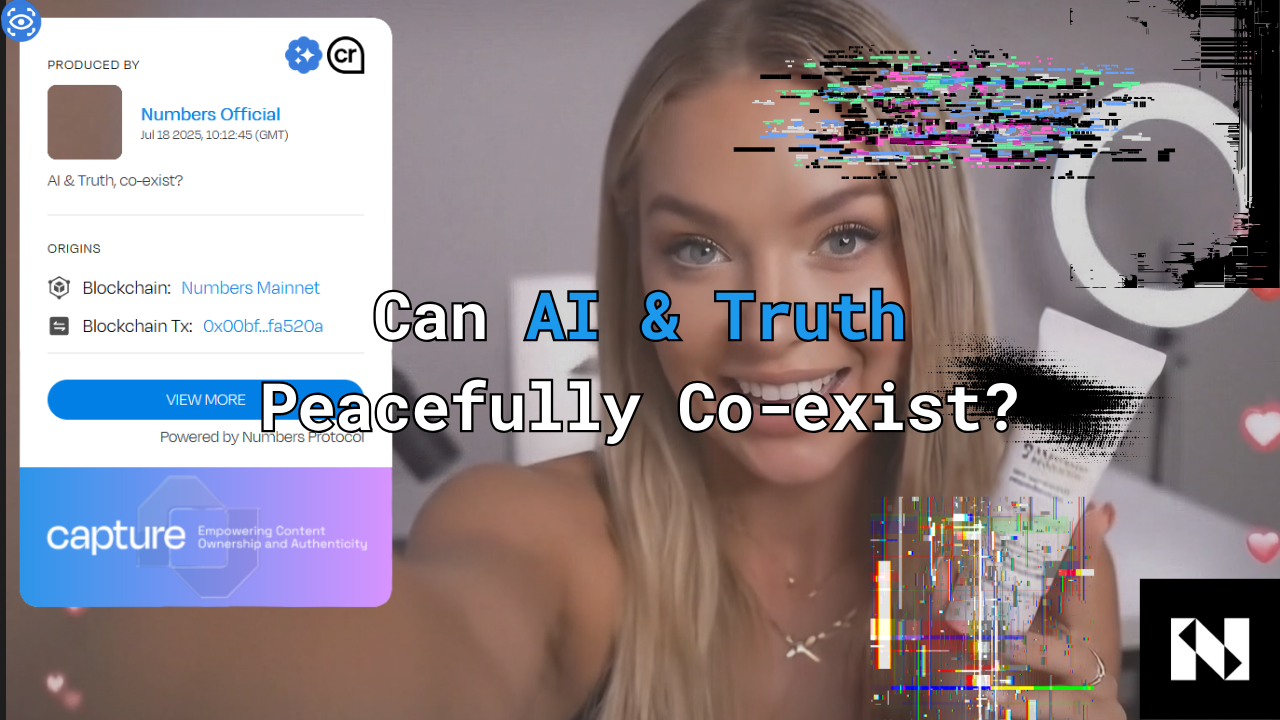

Note: This short video was created entirely with AI. View its full on‑chain provenance receipt to verify the media before you trust it.

https://asset.captureapp.xyz/bafybeiglu3dmzwbo7rietddp74hxhshdgd5vtiqux5qkbw5gim2if7m7fe

The phrase “AI v. Truth” makes great click‑bait, but it misses the point. AI, like every tool humans have ever built, from the printing press to the camera phone, is morally neutral. Hammers drive nails or break windows; the difference is always in the hands that wield them. Generative models are no different: they can accelerate discovery or turbo‑charge deception. Our goal at Numbers is to tip the scales decisively toward the first outcome.

The Real Risk Isn’t “Evil Machines”; It’s Frictionless Fabrication

When a model can spin up photorealistic evidence or authoritative‑sounding prose in seconds, bad actors get scale they never had before. That scale threatens:

- Civic Signals — Mass‑produced “astroturf” drowns out genuine public input.

- Market Integrity — A single fake image can swing billions in value before truth catches up.

- Everyday Trust — If any video might be fake, all videos become suspect.

Notice the pattern? The danger flows from human motives amplified by automation, not from malevolent code. Treating AI as inherently evil distracts us from building the guardrails that matter.

Provenance: Turning the Camera Lens Back on Itself

Instead of policing every output after it spreads, we embed authenticity at the moment of creation:

- Capture & Seal — Capture and ProofSnap record cryptographic metadata (time, place, device) at the moment the shutter clicks.

- Hash to Chain — That metadata is hashed and committed on‑chain, where it cannot be silently altered, creating an auditable receipt.

- Verify & Share — Anyone, anywhere, can inspect the receipt before trusting the asset.
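The three steps above can be sketched in a few lines. This is a minimal, hypothetical illustration using only Python's standard library: SHA‑256 stands in for the cryptographic fingerprint, and an in‑memory, hash‑chained list (`Ledger`) stands in for the blockchain. The names `capture_and_seal`, `Ledger`, and `verify`, and the sample metadata values, are invented for this example. It is not the Capture or ProofSnap API, and it omits device signatures and real on‑chain storage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first ledger entry


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def canonical(obj: dict) -> bytes:
    """Serialize deterministically so identical content always hashes identically."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")


@dataclass
class Ledger:
    """Hypothetical append-only ledger standing in for a blockchain.

    Each entry stores the hash of the entry before it, so rewriting any
    earlier record breaks every link that follows.
    """
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {"prev": prev, "record": record}
        entry_hash = sha256_hex(canonical(body))
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash  # serves as the receipt ID

    def find(self, entry_hash: str) -> Optional[dict]:
        return next((e for e in self.entries if e["entry_hash"] == entry_hash), None)

    def is_intact(self) -> bool:
        """Recompute every hash and link; any edited entry makes this False."""
        prev = GENESIS
        for e in self.entries:
            body = {"prev": e["prev"], "record": e["record"]}
            if e["prev"] != prev or sha256_hex(canonical(body)) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True


def capture_and_seal(asset: bytes, time: str, place: str, device: str) -> dict:
    """Capture & Seal: bind the asset's digest to its capture metadata."""
    return {"asset_sha256": sha256_hex(asset), "time": time, "place": place, "device": device}


def verify(asset: bytes, receipt_id: str, ledger: Ledger) -> bool:
    """Verify & Share: recompute the asset hash and check it against the receipt."""
    entry = ledger.find(receipt_id)
    if entry is None or not ledger.is_intact():
        return False
    return entry["record"]["asset_sha256"] == sha256_hex(asset)


if __name__ == "__main__":
    ledger = Ledger()
    photo = b"placeholder raw image bytes"
    receipt = ledger.append(
        capture_and_seal(photo, "2024-01-01T00:00:00Z", "placeholder-location", "device-001")
    )
    print(verify(photo, receipt, ledger))             # True: untouched asset
    print(verify(photo + b"edit", receipt, ledger))   # False: asset was altered
```

The hash chain is what makes the record tamper‑evident: editing an earlier entry changes its recomputed hash and breaks the link to every later entry, which `is_intact` detects.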

It’s the digital equivalent of signing a canvas before the paint dries: simple, public, hard to fake.

A Human‑Centric Framework for Co‑existence

Tool‑making is humanity’s super‑power; tool‑stewardship must be its twin. We see four pillars:

- Transparency by Default — Label synthetic media, publish model lineage, open‑source the audit trail.

- Human in the Loop — Keep editors, scientists, and moderators as final arbiters in high‑stakes calls.

- Incentives for Integrity — Reward platforms and creators whose content carries verifiable provenance.

- Lifelong AI Literacy — Teach everyone, from grade‑schoolers to board members, how to question, verify, and create responsibly.

None of this requires believing that AI is “good” or “bad.” It requires believing that we remain responsible for the worlds our tools make possible.

Help us co-create the full video.

Comment with a prompt that could extend the story, e.g., “Generate a newsroom scene where a reporter uses Numbers Capture to debunk a viral deepfake.”

We’ll prototype the most compelling ideas and publish them with full provenance receipts!